Neural Chip Design [1/4: Overview]

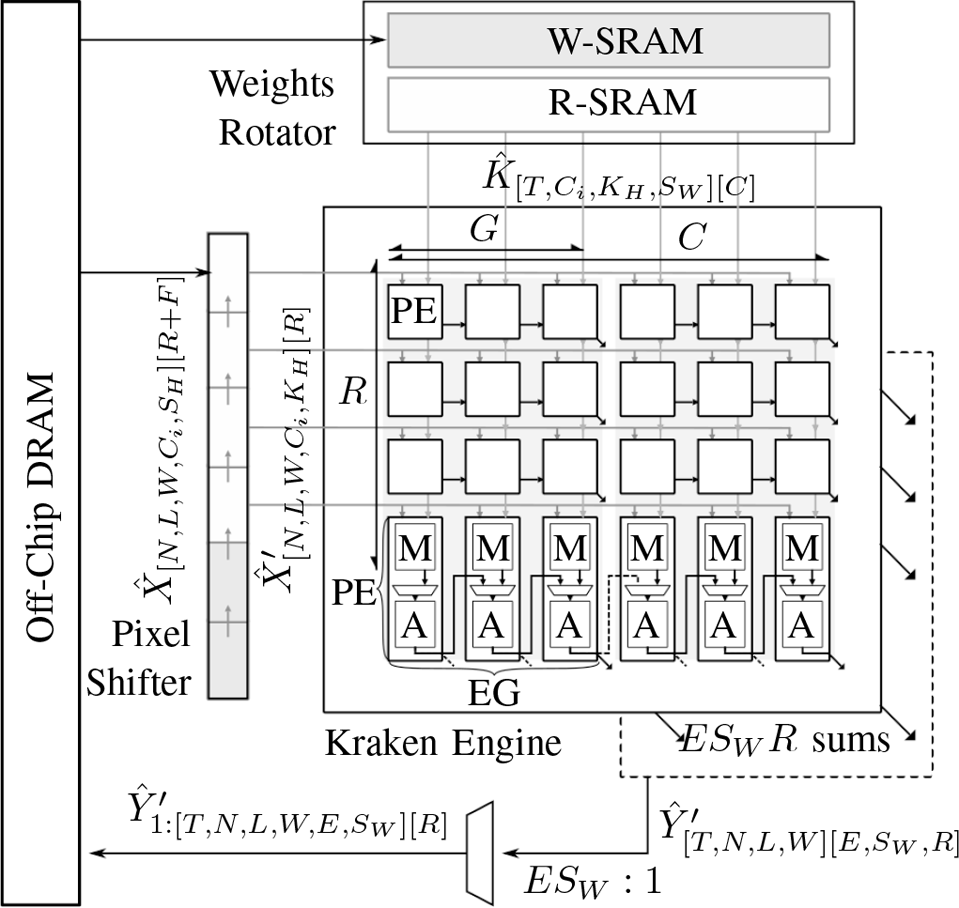

Kraken Engine

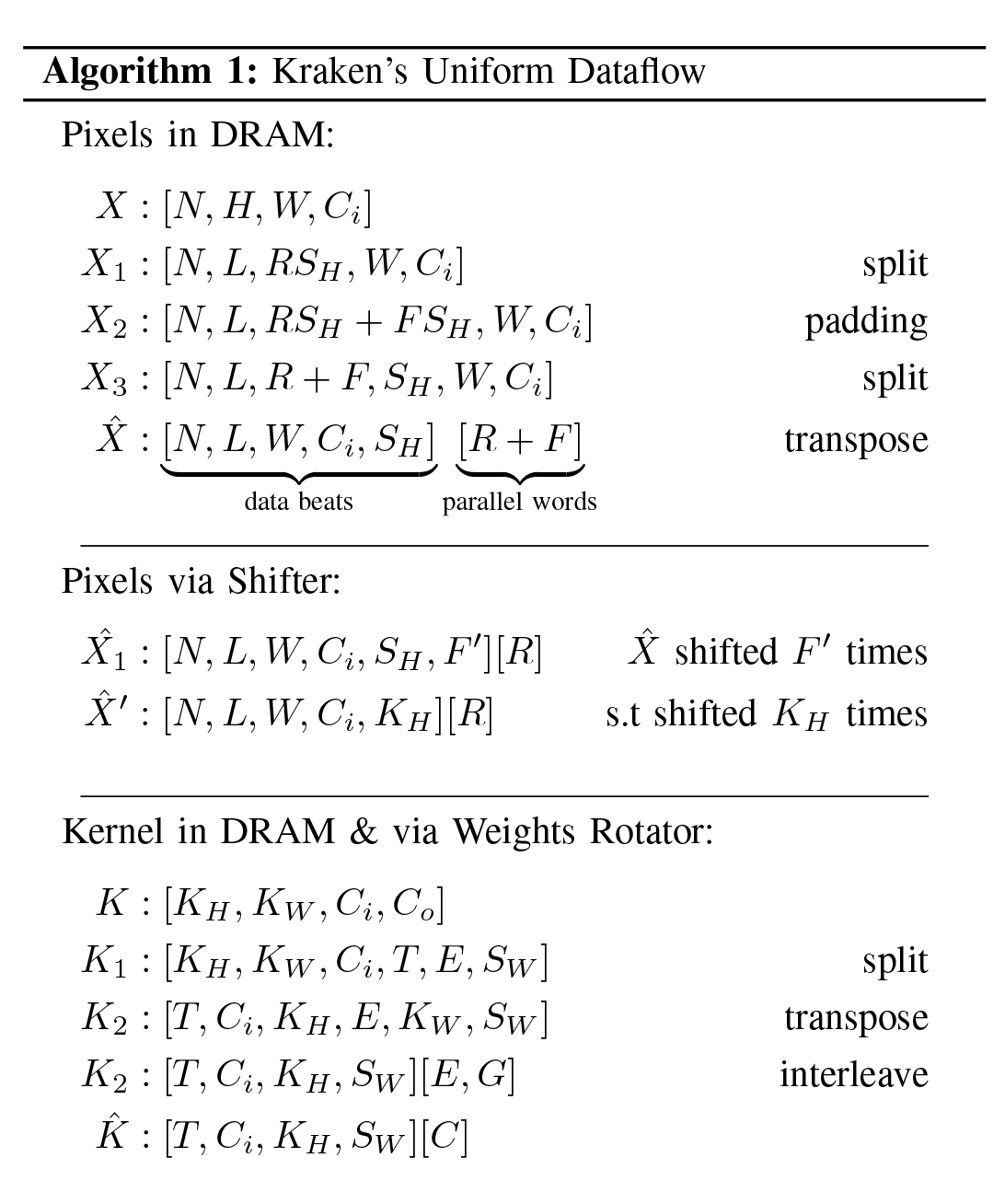

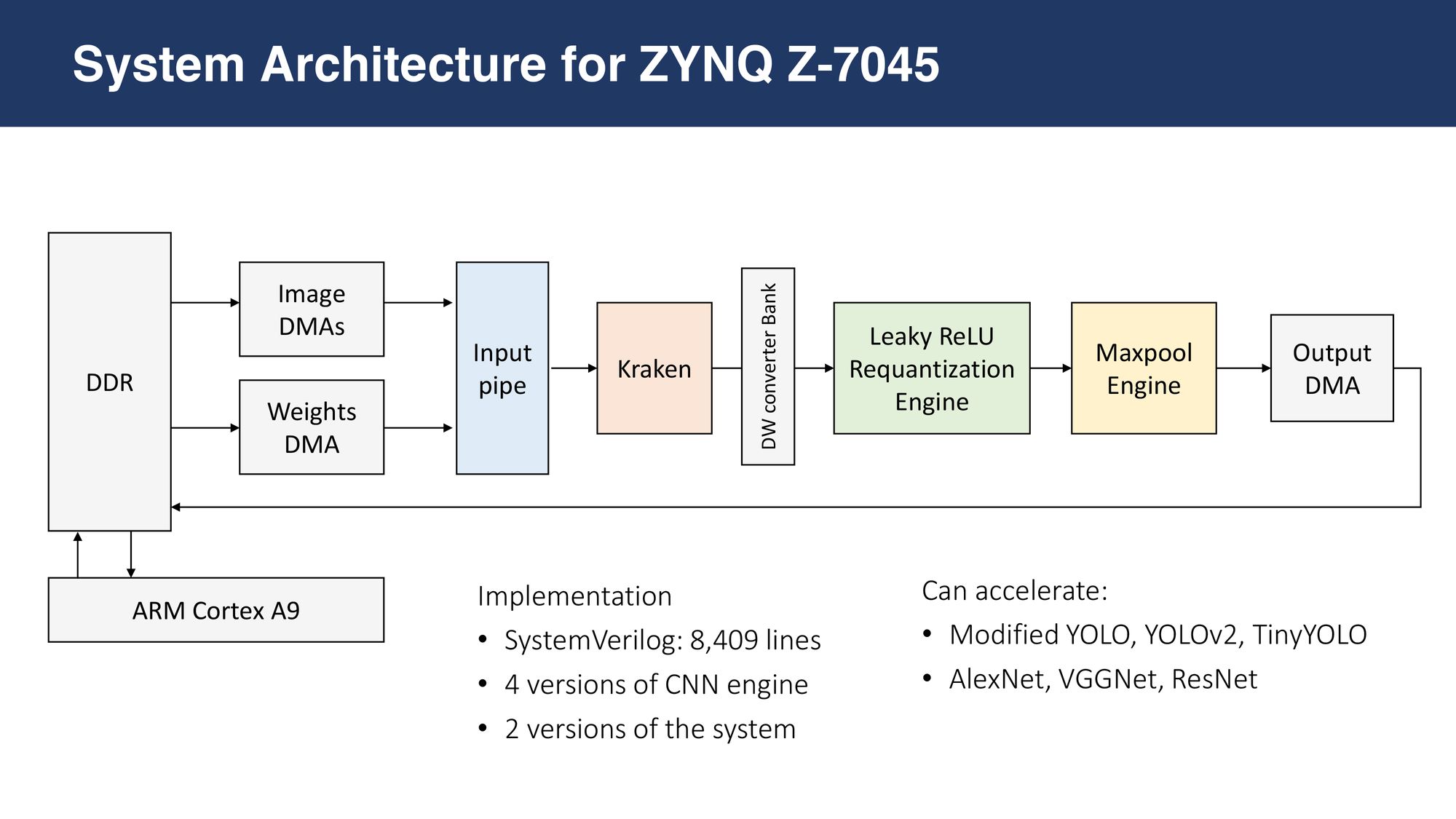

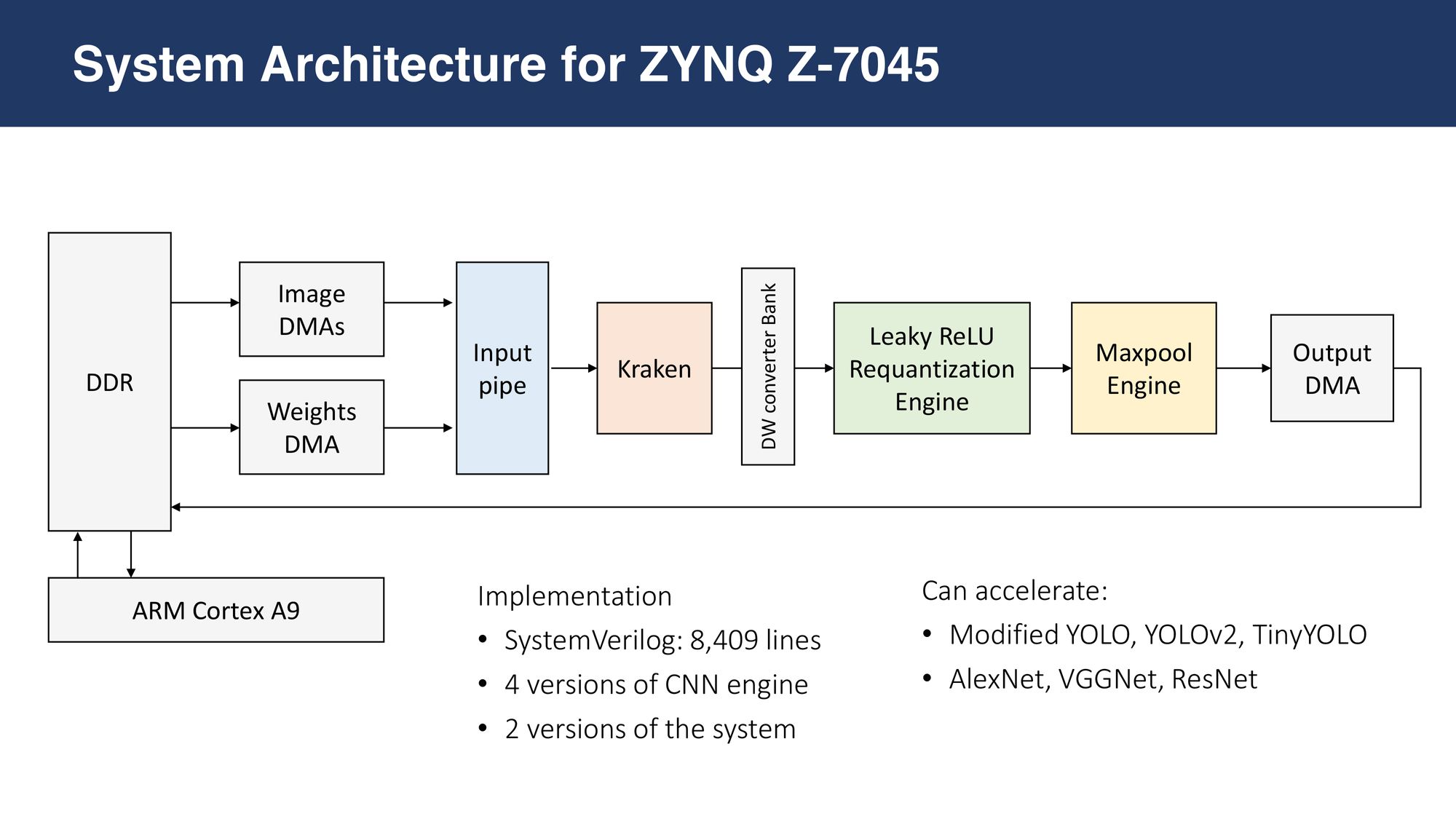

In March 2020, I started building Kraken as a personal passion project: an engine that accelerates any kind of Deep Neural Network (DNN) built from convolutional layers, fully-connected layers, and matrix products, using a single uniform dataflow pattern while occupying remarkably little on-chip area. I synthesized it in 65-nm CMOS technology at 400 MHz, packing 672 MACs into 7.3 mm², for a peak performance of 537.6 Gops.
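As a sanity check, the peak figure follows from the MAC count and the clock frequency. This assumes the common convention that one MAC counts as two operations (one multiply and one add), which reproduces the quoted 537.6 Gops. The area efficiency on the second line is derived here from the quoted peak and area. It is not a separately reported number.

```latex
\begin{aligned}
P_{\text{peak}} &= N_{\text{MAC}} \times 2\,\frac{\text{ops}}{\text{MAC}\cdot\text{cycle}} \times f_{\text{clk}}
  = 672 \times 2 \times 400\ \text{MHz} = 537.6\ \text{Gops} \\
\frac{P_{\text{peak}}}{A} &= \frac{537.6\ \text{Gops}}{7.3\ \text{mm}^2} \approx 73.6\ \text{Gops/mm}^2
\end{aligned}
```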

When benchmarked on AlexNet [336.6 fps], VGG16 [17.5 fps], and ResNet-50 [64.2 fps] (yeah, those are old, but they are still the most widely used benchmarks), it outperforms state-of-the-art ASIC architectures in overall performance efficiency, DRAM accesses, arithmetic intensity, and throughput, delivering 5.8× more Gops/mm² and 1.6× more Gops/W.

I submitted the design as a journal paper to IEEE TCAS-1, the #3 journal in the field, and it is currently under review. You can find the paper at the following link.

Technologies Used

Throughout the project, I wrote code in, moved back and forth between, and interfaced several technologies spanning hardware (RTL), software, and machine vision.

- Python - NumPy, TensorFlow, PyTorch

- SystemVerilog - RTL, Testbenches

- TCL - Scripting the Vivado project, ASIC synthesis

- C++ - Firmware to control the system-on-chip

- Tools: Xilinx (Vivado, SDK), Cadence (Genus, Innovus)...

My Workflow

Through the Kraken project, I developed a 15-step workflow that helps me move between golden models in Python, RTL designs & simulations in SystemVerilog, and firmware in C++. I have described the steps in detail, with code examples, in three more blog posts.

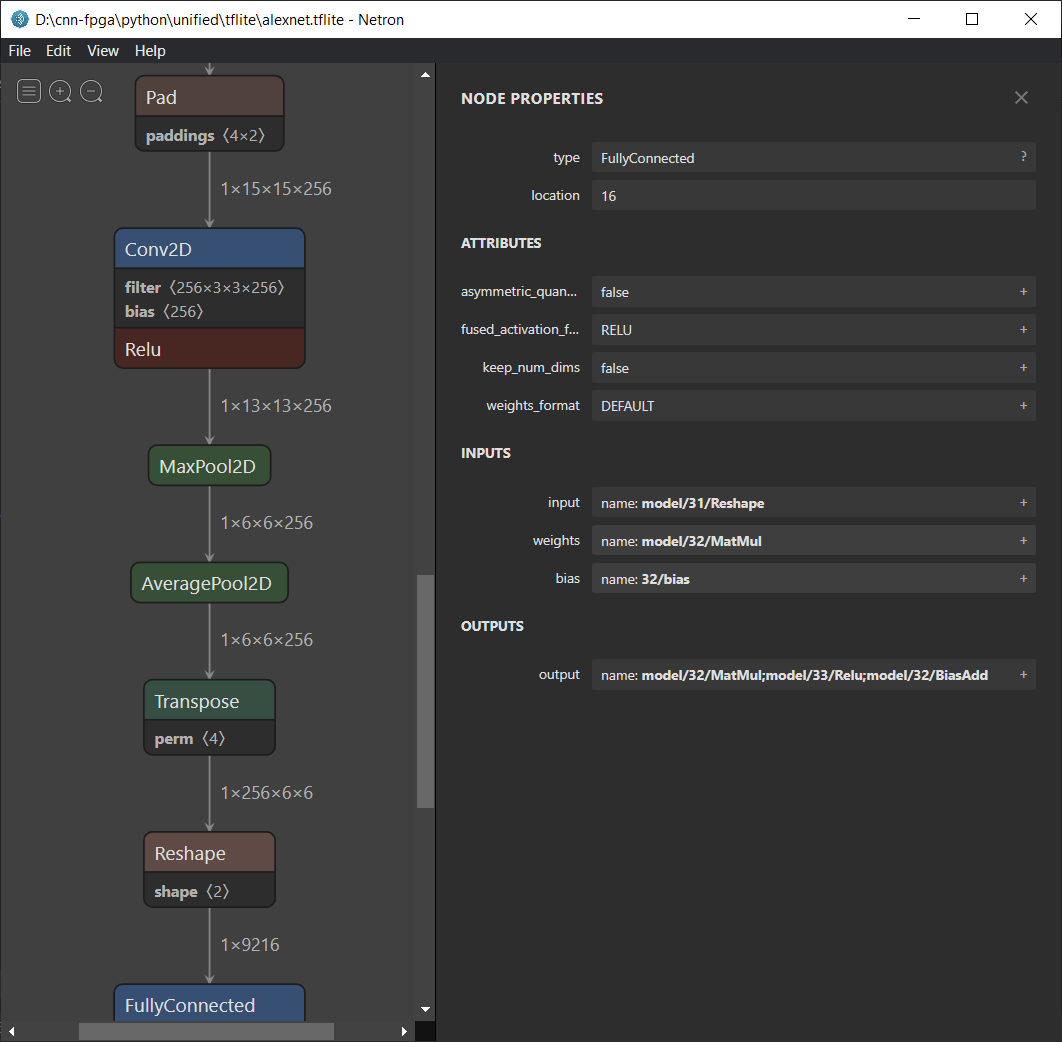

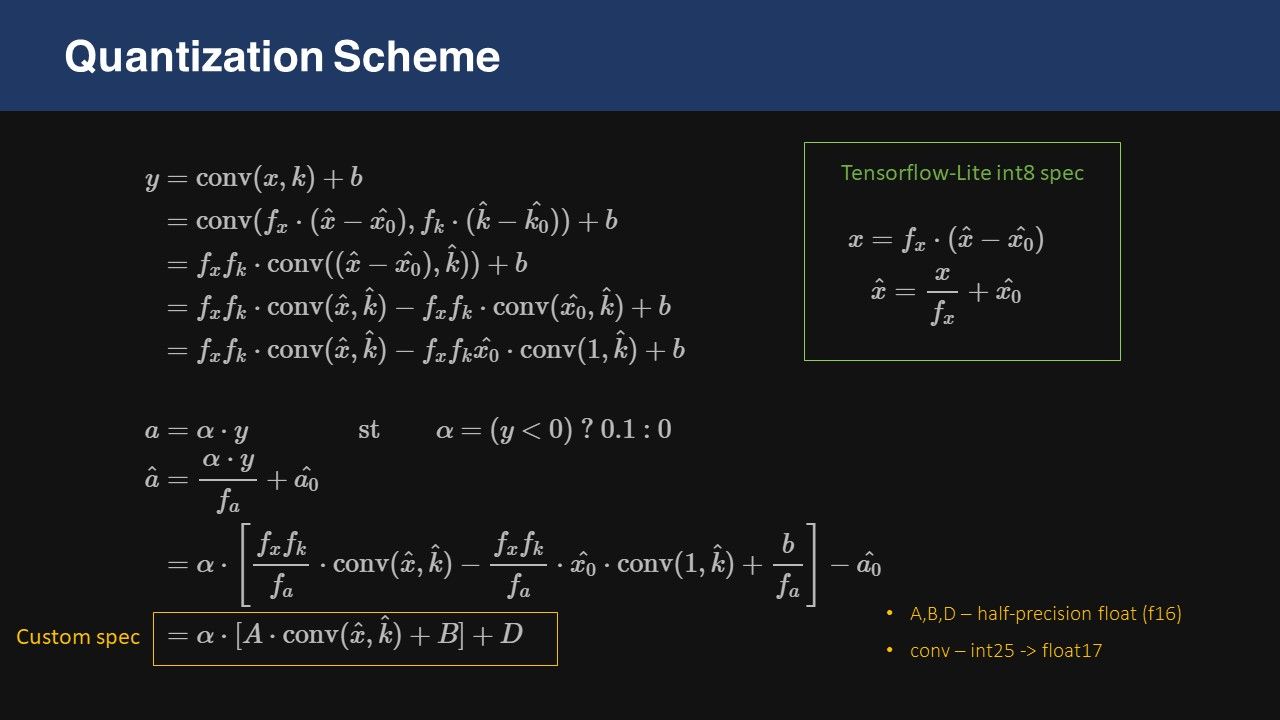

2/4: Golden Model

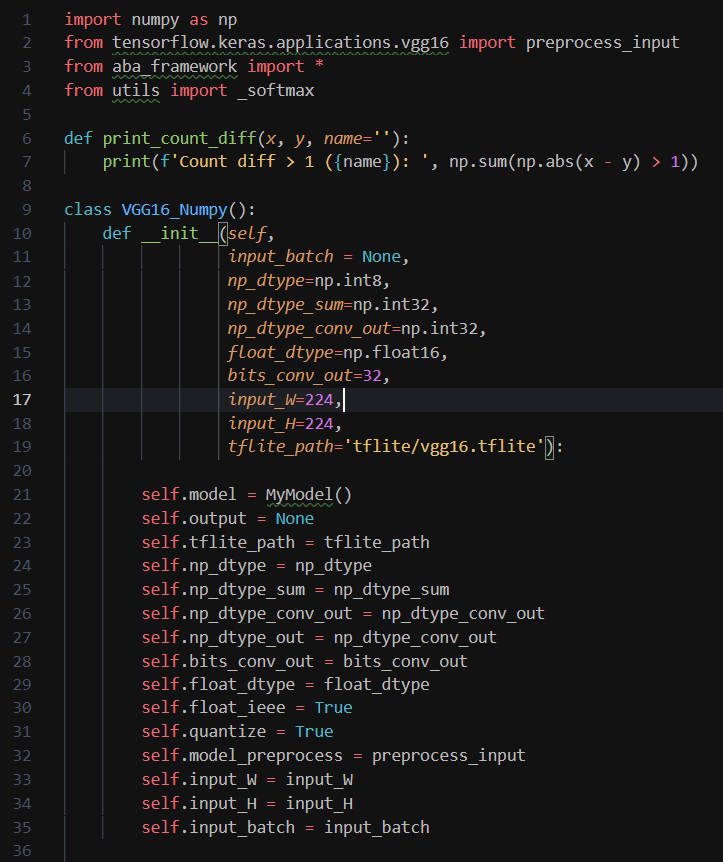

1. PyTorch/TensorFlow: Explore DNN models, quantize & extract weights

2. Golden Model in Python (NumPy stack): Custom OOP framework, process the weights, convert to custom datatypes
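The first two steps are quantizing and extracting the weights, then building a golden model that computes in the same integer arithmetic as the hardware. They can be illustrated with a minimal NumPy sketch. The sketch assumes symmetric per-tensor int8 quantization and an HWC tensor layout. The helpers `quantize_symmetric` and `golden_conv2d` are hypothetical. Kraken's actual custom datatypes and OOP framework are not described in this post.

```python
import numpy as np

def quantize_symmetric(x: np.ndarray, bits: int = 8):
    """Symmetric per-tensor quantization (an assumed scheme): x ~= q * scale."""
    qmax = 2 ** (bits - 1) - 1                      # 127 for 8 bits
    max_abs = float(np.max(np.abs(x)))
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = np.clip(np.round(x / scale), -qmax - 1, qmax).astype(np.int32)
    return q, scale

def golden_conv2d(x_q: np.ndarray, w_q: np.ndarray) -> np.ndarray:
    """Hypothetical bit-exact golden model: 'valid' 2-D convolution, stride 1.

    x_q: (H, W, Cin) integer input; w_q: (KH, KW, Cin, Cout) integer weights.
    Accumulates in int64 so nothing overflows here; a real accumulator
    width is a hardware design choice.
    """
    H, W, _ = x_q.shape
    KH, KW, _, Cout = w_q.shape
    w = w_q.astype(np.int64)
    out = np.zeros((H - KH + 1, W - KW + 1, Cout), dtype=np.int64)
    for i in range(H - KH + 1):
        for j in range(W - KW + 1):
            patch = x_q[i:i + KH, j:j + KW, :].astype(np.int64)  # (KH, KW, Cin)
            # Contract kernel height, width and input channels -> (Cout,)
            out[i, j, :] = np.tensordot(patch, w, axes=3)
    return out

# Usage: quantize random float weights, then run the integer golden model
rng = np.random.default_rng(0)
w_float = rng.standard_normal((3, 3, 4, 8)).astype(np.float32)
w_q, w_scale = quantize_symmetric(w_float)
x_q = rng.integers(-128, 128, size=(6, 6, 4))   # int8-range activations
y = golden_conv2d(x_q, w_q)
print(y.shape)  # (4, 4, 8)
```

The golden model uses only integers. Its outputs can therefore serve as bit-exact expected vectors for the RTL, instead of float results that need a tolerance.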

3/4: RTL Design & Verification

3. Whiteboard: Design hardware modules and state machines

4. RTL Design: Write SystemVerilog/Verilog for the whiteboard designs

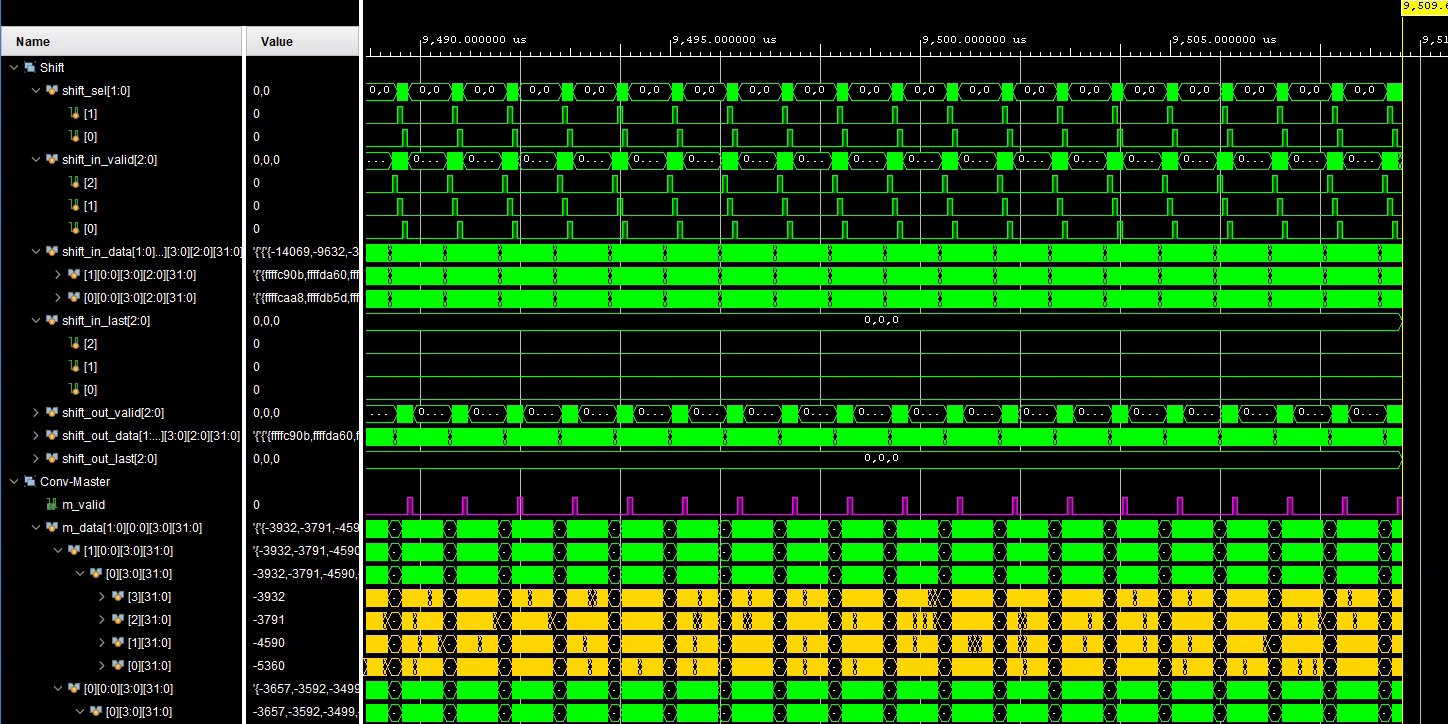

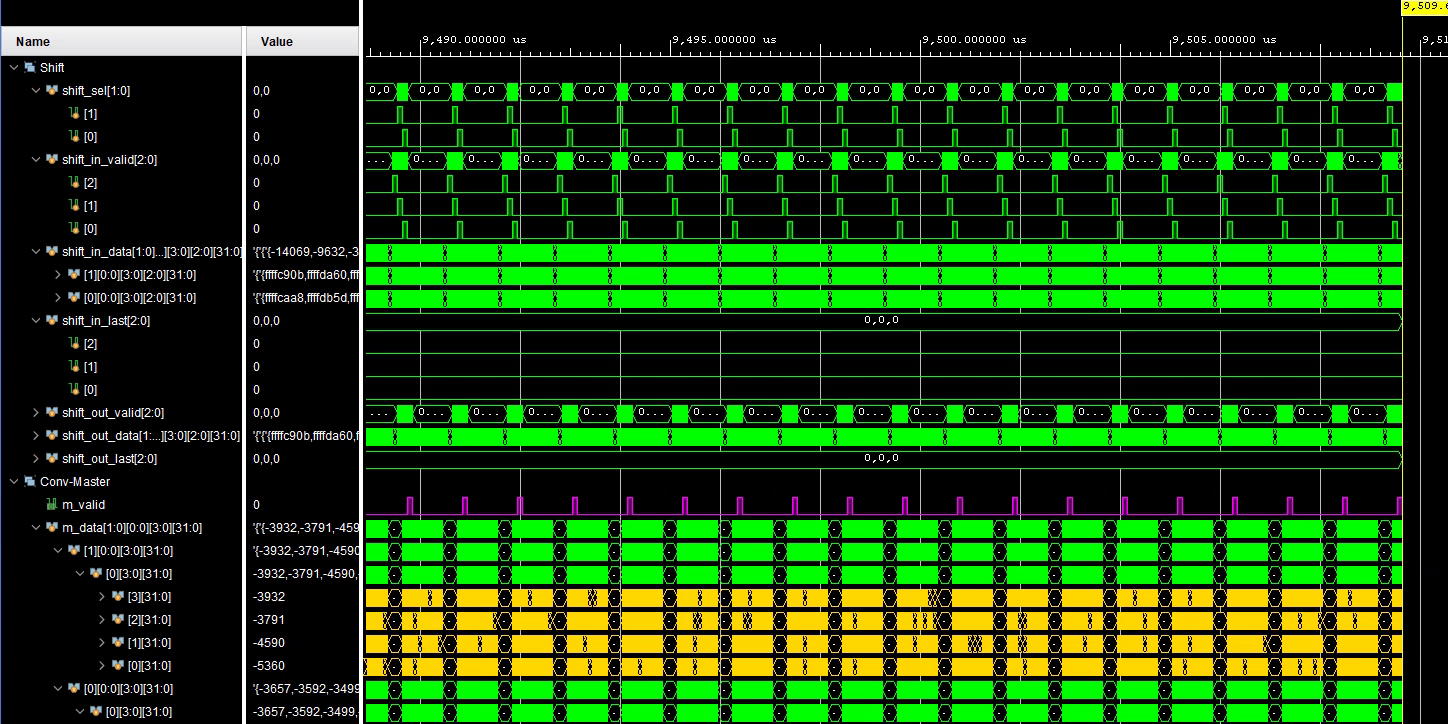

5. Generate Test Vectors: Using Python notebooks

6. Testbenches: SystemVerilog OOP testbenches that read the input vectors (txt files), randomly drive the valid & ready signals, and write the output vectors (txt files)

7. Debug: Python notebooks to compare the expected output with the simulation output and find which dimensions have errors

8. Microsoft Excel: Manually simulate the values on the wires in Excel to debug

9. Repeat 3-8: For every module and every level of integration

10. ASIC Synthesis
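A minimal NumPy sketch can show the test-vector and debug steps: writing vectors to text files, then finding which dimensions of a simulated output are wrong. The sketch makes several assumptions. It uses one integer per line, row-major flattening, and the `data/input`, `data/output_exp`, `data/output_sim` folders described in the directory structure of this post. The helpers `write_vector`, `read_vector` and `find_mismatches` are hypothetical and are not Kraken's actual notebooks.

```python
import tempfile
from pathlib import Path
import numpy as np

def write_vector(path: Path, arr: np.ndarray) -> None:
    """Flatten an integer tensor (row-major) and write one value per line."""
    path.parent.mkdir(parents=True, exist_ok=True)
    np.savetxt(path, arr.reshape(-1), fmt="%d")

def read_vector(path: Path, shape) -> np.ndarray:
    """Read a one-value-per-line text file back into a tensor of the given shape."""
    return np.loadtxt(path, dtype=np.int64, ndmin=1).reshape(shape)

def find_mismatches(expected: np.ndarray, actual: np.ndarray):
    """Return the N-D indices of wrong elements and the error count along each axis."""
    diff = expected != actual
    bad_idx = np.argwhere(diff)                     # one row per wrong element
    per_axis = [
        np.count_nonzero(diff, axis=tuple(a for a in range(diff.ndim) if a != ax))
        for ax in range(diff.ndim)
    ]
    return bad_idx, per_axis

# Usage with a throwaway directory standing in for kraken/data
rng = np.random.default_rng(1)
data = Path(tempfile.mkdtemp()) / "data"
x = rng.integers(-128, 128, size=(4, 4, 8))
y_exp = 2 * x                                       # placeholder for the golden-model output
write_vector(data / "input" / "x.txt", x)
write_vector(data / "output_exp" / "y.txt", y_exp)

# Pretend the RTL simulation wrote an output with a bug in channel 5
y_sim = y_exp.copy()
y_sim[:, :, 5] += 1
write_vector(data / "output_sim" / "y.txt", y_sim)

bad, per_axis = find_mismatches(read_vector(data / "output_exp" / "y.txt", y_exp.shape),
                                read_vector(data / "output_sim" / "y.txt", y_exp.shape))
print(len(bad))                 # 16
print(per_axis[2].tolist())     # [0, 0, 0, 0, 0, 16, 0, 0] -> every error is in channel 5
```

The per-axis counts point you at the faulty dimension. In this example, every error falls in a single output channel, which suggests a bug in the logic that handles that channel rather than a random corruption.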

4/4: System-on-Chip Integration & Firmware Development

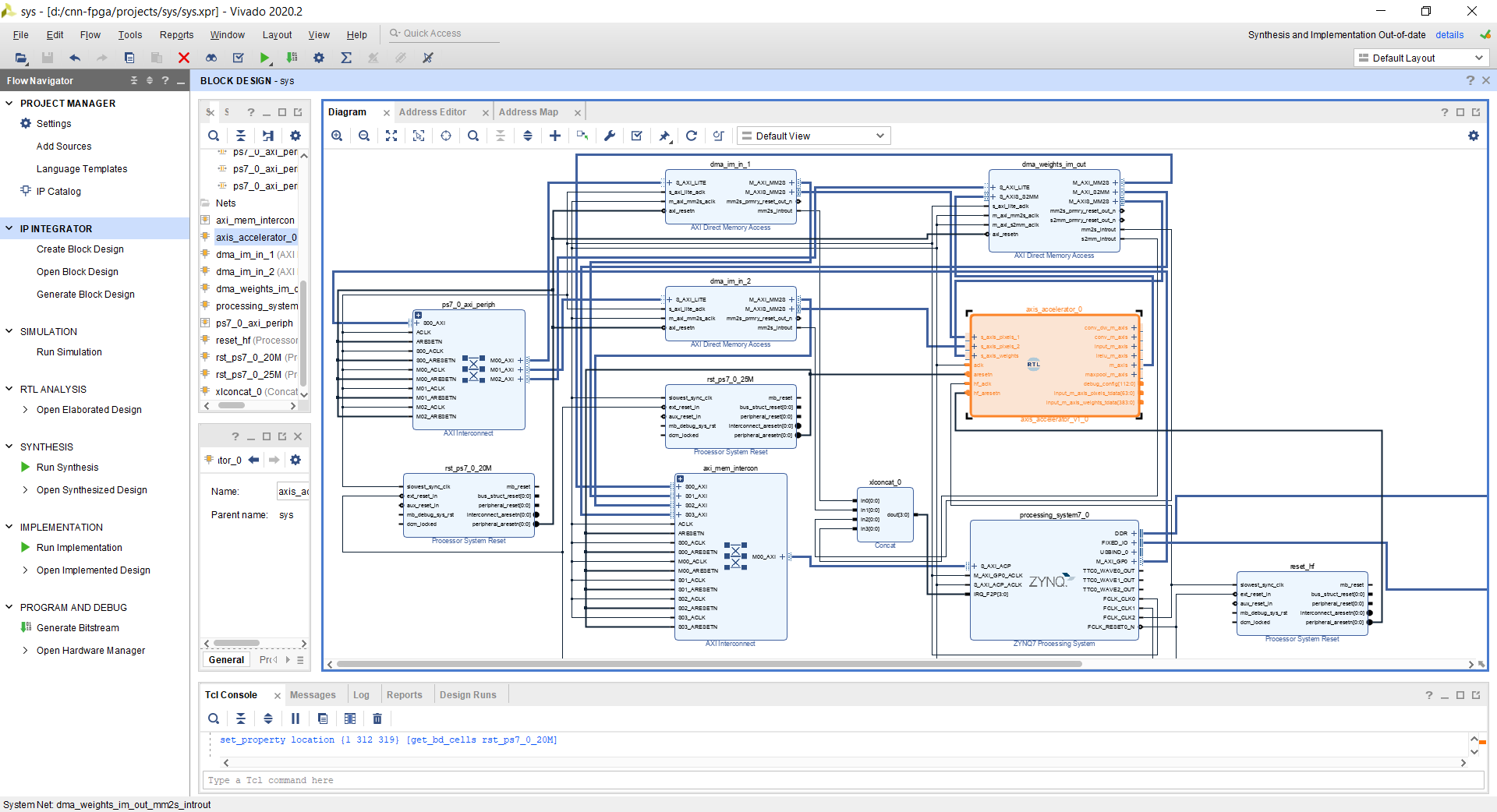

11. SoC Block Design: Build FPGA projects manually in Vivado and synthesize them

12. Automation: TCL scripts to automate project building and configuration

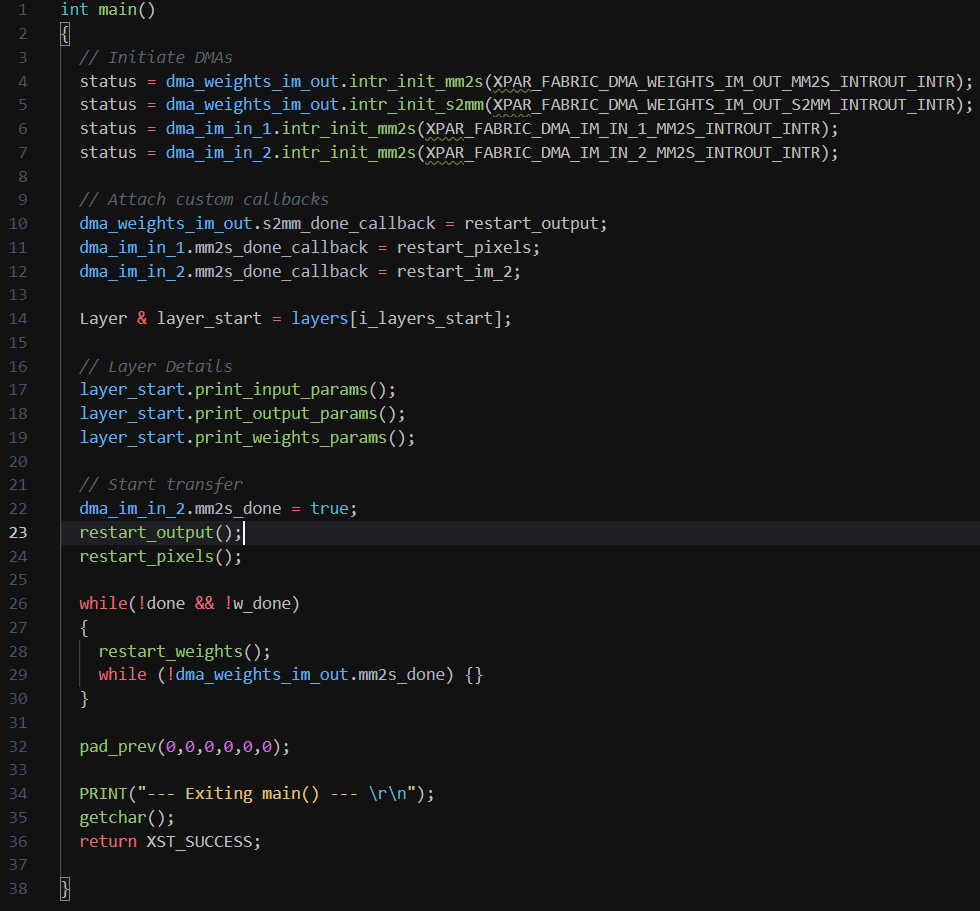

13. C++ Firmware: Control the custom modules

14. Hardware Verification: Test on the FPGA and compare the output with the golden model

15. Repeat 11-14
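The automation step builds the Vivado project from TCL scripts instead of through the GUI. It can also be launched from Python, so it sits alongside the test-vector scripts. In the sketch below, `-mode batch`, `-source`, `-tclargs`, `-nojournal` and `-nolog` are standard Vivado command-line options. The script path `fpga/scripts/build_project.tcl`, the `kraken_soc` argument and both helper functions are hypothetical.

```python
import shutil
import subprocess
from pathlib import Path

def vivado_batch_cmd(script: Path, *tclargs: str) -> list[str]:
    """Assemble a Vivado batch-mode command that sources a TCL build script."""
    cmd = ["vivado", "-mode", "batch", "-nojournal", "-nolog", "-source", str(script)]
    if tclargs:
        cmd += ["-tclargs", *tclargs]   # visible as $argv inside the TCL script
    return cmd

def build_vivado_project(script: Path, *tclargs: str, dry_run: bool = False) -> int:
    """Run the build (or only print the command); returns Vivado's exit code."""
    cmd = vivado_batch_cmd(script, *tclargs)
    if dry_run:
        print(" ".join(cmd))
        return 0
    if shutil.which("vivado") is None:
        raise FileNotFoundError("vivado is not on PATH; source the Vivado settings script first")
    return subprocess.run(cmd, check=False).returncode

# Hypothetical script and project name, following the fpga/scripts layout
build_vivado_project(Path("fpga/scripts/build_project.tcl"), "kraken_soc", dry_run=True)
# On Linux/macOS this prints:
# vivado -mode batch -nojournal -nolog -source fpga/scripts/build_project.tcl -tclargs kraken_soc
```

Vivado regenerates the project directory from the script. That directory can therefore stay out of version control.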

Directory Structure

Simpler FPGA/ASIC projects can live in a single folder. However, as a project grows in scope and complexity, juggling multiple languages (Python, SystemVerilog, C, TCL...) and tools (Jupyter, Vivado, SDK, Genus, Innovus...) can quickly get out of control.

The project also needs to be version-controlled (git), both to prevent data loss and to move between different stages of development like a time machine. However, FPGA and ASIC tools generate many internal files that are not portable between machines. Therefore, the projects are built automatically from TCL scripts, and only those scripts and the source files are tracked in git.

The following is the structure I developed through my Kraken project.

kraken
│
├── hdl
│   ├── src          : RTL designs (SystemVerilog/Verilog)
│   ├── tb           : SystemVerilog testbenches
│   ├── include      : V/SV files with macros
│   └── external     : open-source SV/V libraries
│
├── fpga
│   ├── scripts      : TCL scripts to build & configure Vivado projects from source
│   ├── projects     : Vivado projects [not git tracked]
│   └── wave         : waveform scripts (wcfg)
│
├── asic
│   ├── scripts      : TCL scripts for synthesis and P&R from source
│   ├── reports      : reports from ASIC tools
│   ├── work         : working folder for ASIC tools [not git tracked]
│   ├── log          : [not git tracked]
│   └── pdk          : technology node files, several GBs [not git tracked]
│
├── python
│   ├── dnns         : TensorFlow, Torch, TFLite extraction
│   ├── framework    : custom framework
│   └── golden       : golden models
│
├── data             : [not git tracked]
│   ├── input        : input test vectors (text files) generated by Python scripts
│   ├── output_exp   : expected output vectors
│   ├── output_sim   : output vectors from hardware simulation
│   └── output_fpga  : output vectors from FPGA
│
├── cpp
│   ├── src          : C++ firmware for the controller
│   ├── include      : header files
│   └── external     : external libraries
│
└── doc              : documentation: Excel files, drawings...
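The layout above can be scaffolded with a short script, which also writes a `.gitignore` that excludes the folders marked [not git tracked]. This is a sketch under assumptions. The folder list is transcribed from the tree. The root-anchored `.gitignore` patterns are one reasonable way to express the untracked markers. `scaffold` is a hypothetical helper, not part of the Kraken repository.

```python
import tempfile
from pathlib import Path

# Folder layout transcribed from the tree above
LAYOUT = {
    "hdl": ["src", "tb", "include", "external"],
    "fpga": ["scripts", "projects", "wave"],
    "asic": ["scripts", "reports", "work", "log", "pdk"],
    "python": ["dnns", "framework", "golden"],
    "data": ["input", "output_exp", "output_sim", "output_fpga"],
    "cpp": ["src", "include", "external"],
    "doc": [],
}

# Generated or machine-specific folders marked [not git tracked]
UNTRACKED = ["fpga/projects/", "asic/work/", "asic/log/", "asic/pdk/", "data/"]

def scaffold(root: Path) -> None:
    """Create the directory tree and a .gitignore for the untracked folders."""
    for top, subs in LAYOUT.items():
        (root / top).mkdir(parents=True, exist_ok=True)
        for sub in subs:
            (root / top / sub).mkdir(exist_ok=True)
    # A leading '/' anchors each pattern to the repository root
    lines = [f"/{p}" for p in UNTRACKED]
    (root / ".gitignore").write_text("\n".join(lines) + "\n")

# Usage in a throwaway location
root = Path(tempfile.mkdtemp()) / "kraken"
scaffold(root)
print((root / ".gitignore").read_text())
```

Git does not track empty folders. A freshly scaffolded tree therefore only appears in `git status` once files are added. A placeholder file such as `.gitkeep` is a common convention for keeping an empty folder in the repository.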